The robots.txt file is one of the main ways of telling a search engine where it can and can’t go on your website. All major search engines support its basic functionality, and some of them also respond to extra rules that can be useful. This guide covers the ways to use robots.txt on your website.

What is a robots.txt file?

Crawl directives

The robots.txt file is one of a number of crawl directives.

A robots.txt file is a text file that is read by search engines (and other systems). Also called the “Robots Exclusion Protocol”, the robots.txt file is the result of a consensus among early search engine developers. It began as an informal agreement rather than an official standard, although all major search engines adhere to it.

What does the robots.txt file do?

Search engines discover and index the web by crawling pages. As they crawl, they discover and follow links. This takes them from site A to site B to site C, and so on. But before a search engine visits any page on a domain it hasn’t encountered before, it opens that domain’s robots.txt file. That file tells it which URLs on the site it is allowed to visit (and which ones it is not).

Where should I put my robots.txt file?

The robots.txt file should always be at the root of your domain. So if your domain is www.example.com, it should be found at https://www.example.com/robots.txt.

It’s also very important that your robots.txt file is actually called robots.txt. The name is case sensitive, so get that right or it just won’t work.
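As a small illustration of that rule, the following sketch derives the robots.txt location for any page URL by keeping the scheme and host and replacing everything else with the fixed, lowercase path `/robots.txt`. The helper name `robots_url` and the sample shop URL are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url):
    """Build the robots.txt URL for the domain that serves page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host; the path is always the lowercase "/robots.txt"
    # at the root, with no query string or fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.example.com/shop/t-shirts?colour=red"))
# https://www.example.com/robots.txt
```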

Pros and cons of using robots.txt

Pro: managing crawl budget

It’s generally understood that a search spider arrives at a website with a pre-determined “allowance” for how many pages it will crawl, or how much time and resource it will spend. That allowance depends on the site’s authority, size and reputation, and on how efficiently the server responds. SEOs call this the crawl budget.

If you think that your website has problems with crawl budget, then blocking search engines from ‘wasting’ energy in unimportant parts of your site might mean that they focus instead on the sections which do matter.

It can sometimes be beneficial to block the search engines from crawling problematic sections of your site, especially on sites where a lot of SEO clean-up has to be done. Once you’ve tidied things up, you can let them back in.
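A minimal sketch of that block-then-reopen workflow, again using Python's `urllib.robotparser` to check the effect of each version of the file. The `/old-archive/` section and the `ExampleBot` user agent are invented for the example; substitute whichever part of your own site needs the clean-up.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules while the clean-up is in progress.
DURING_CLEANUP = """\
User-agent: *
Disallow: /old-archive/
"""

# Hypothetical rules afterwards: an empty Disallow blocks nothing.
AFTER_CLEANUP = """\
User-agent: *
Disallow:
"""


def can_crawl(robots_txt, url, user_agent="ExampleBot"):
    """Check a URL against robots.txt content supplied as a string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


archive = "https://www.example.com/old-archive/2015/"
products = "https://www.example.com/products/"

print(can_crawl(DURING_CLEANUP, archive))   # False
print(can_crawl(DURING_CLEANUP, products))  # True
print(can_crawl(AFTER_CLEANUP, archive))    # True
```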

A note on blocking query parameters

One situation where crawl budget is particularly important is when your site uses a lot of query string parameters to filter or sort lists. Let’s say you have 10 different query parameters, each with different values that can be used in any combination (like t-shirts in multiple colours and sizes). This leads to lots of possible valid URLs, all of which might get crawled. Blocking query parameters from being crawled will help make sure the search engine only spiders your site’s main URLs and won’t go into the enormous trap that you’d otherwise create.
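To get a feel for the numbers, the sketch below counts the URL variants for the 10 parameters mentioned above, under the assumption (ours, not a measured figure) that each parameter has 3 possible values and can also be left out. It then shows how a wildcard rule such as `Disallow: /*?` would catch every URL with a query string. The `*` wildcard is honoured by the major search engines but not necessarily by every crawler or parser, so the matching is written out by hand here in simplified form, and the `matches` helper is hypothetical.

```python
import re

PARAMETERS = 10            # from the example above
VALUES_PER_PARAMETER = 3   # assumption, purely for illustration

# Each parameter is either absent or set to one of its values, so every
# parameter contributes (values + 1) choices to the total.
url_variants = (VALUES_PER_PARAMETER + 1) ** PARAMETERS
print(url_variants)  # 1048576


def matches(pattern, path):
    """Simplified robots.txt path matching with '*' as a wildcard."""
    # Escape each literal piece, then let '*' stand for any characters.
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.match(regex, path) is not None


# "Disallow: /*?" targets any path that contains a query string.
print(matches("/*?", "/t-shirts?colour=red&size=m"))  # True
print(matches("/*?", "/t-shirts"))                    # False
```

Even with only three values per parameter, a single listing page can fan out into more than a million crawlable URLs, which is why one wildcard rule can save so much crawl budget.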

Con: not removing a page from search results

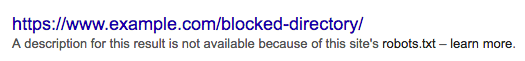

Even though you can use the robots.txt file to tell a spider where it can’t go on your site, you can’t use it to tell a search engine which URLs are not to show in the search results – in other words, blocking it won’t stop it from being indexed. If the search engine finds enough links to that URL, it will include it, it will just not know what’s on that page. So your result will look like this:

To reliably keep a page out of the search results, use a noindex tag instead. That means that, in order to find the noindex tag, the search engine has to be able to access that page, so don’t block it with robots.txt.

Con: not spreading link value

If a search engine can’t crawl a page, it can’t spread the link value across the links on that page. When a page is blocked with robots.txt, it’s a dead-end. Any link value which might have flowed to (and through) that page is lost.